Website Performance - Can I Beat Dries?

This post introduces a series of posts that dive deep into optimizing Drupal sites for browser performance.

Shortly after I rebuilt this site, I was reading a blog post on dri.es and noticed Dries has really gone out of his way to optimize the performance and size of that site. If you haven't looked lately, it's worth taking a look to see how lightweight and fast that site is. I thought it'd be a fun challenge to see if I can make this site even smaller and faster!

Background

One of the goals of building this site was to keep it simple and performant. More and more websites are becoming way too complex and 'bloated', and I don't want to contribute to this trend.

First though, this site is empty, so why do I even have this site? I don't blog much, nor do I intend to. I really only use this site as a live test bed for demos, experiments, and evaluating different changes at Acquia. (Also everyone wants to own their-name-dot-com, and I might as well have something running on it.)

Second, one of the tenets of security is to minimize your attack surface. For this reason, I try not to add many modules to the sites I build. As of this writing, this site only has a few Drupal 8 core modules, one custom module, one base theme, and a custom theme.

Lastly, this site doesn't need to do much to serve its purpose, other than render a few pages and images. This makes it perfect for a CDN, so backend server performance isn't really an issue. With respect to this challenge, everything will be about download size and browser rendering performance.

The Challenge

In order to have a challenge, the rules of the game must be defined. In this case, there needs to be some metric that can be measured to independently declare success or failure.

It doesn't make sense to focus on the speed of the page response from Drupal. Both sites are blogs serving anonymous users, and both offload content caching to the same CDN. Just trying to measure something like the Time To First Byte (TTFB) in a blunt way like the following would be both boring and fruitless.

$ time curl -s https://dri.es > /dev/null

real 0m0.188s
user 0m0.020s
sys 0m0.020s

$ time curl -s https://cashwilliams.com > /dev/null

real 0m0.181s
user 0m0.021s
sys 0m0.016s
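Strictly speaking, wrapping curl in `time` reports the duration of the whole request, not just the wait for the first byte. A minimal sketch of a closer TTFB reading, assuming a curl build that supports the `time_starttransfer` write-out variable (the `ttfb` helper name is made up for this example):

```shell
# Hypothetical helper: print the time to first byte, in seconds, for a URL.
# -s hides the progress meter, -o /dev/null discards the response body, and
# -w prints curl's time_starttransfer value once the transfer finishes.
ttfb() {
  curl -s -o /dev/null -w '%{time_starttransfer}\n' "$1"
}
```

Calling `ttfb https://dri.es` and `ttfb https://cashwilliams.com` would print one number per site. With both sites behind the same CDN, I would still expect the results to be too close to be interesting.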

Real users don't actually care about TTFB. Users expect a website to load and render as fast as possible, regardless of what bottlenecks might slow down that process. This is referred to as perceived performance. Perceived performance can be hard to measure because, by definition, it's based on a user's perception, and it can even be tricked with things like lazy loading and preloading.

With this in mind, the metric I'll be using is SpeedIndex, which can be measured using tools like webpagetest.org and is defined as:

The Speed Index metric [...] measures how quickly the page contents are visually populated (where lower numbers are better). It is particularly useful for comparing experiences of pages against each other (before/after optimizing, my site vs competitor, etc) and should be used in combination with the other metrics (load time, start render, etc) to better understand a site's performance.
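To make that definition more concrete: as I understand it, Speed Index is the area above the page's visual-completeness curve, so a page that paints most of its content early scores lower. A rough sketch of that calculation, assuming made-up samples of "time in milliseconds, percent visually complete" and a hypothetical `speed_index` helper (real tools derive these samples from video frames of the page loading):

```shell
# Hypothetical helper: approximate Speed Index (in ms) from "time_ms percent"
# samples on stdin, treating completeness as constant between samples.
speed_index() {
  awk 'NR > 1 { total += (1 - prev_pct / 100) * ($1 - prev_t) }
       { prev_t = $1; prev_pct = $2 }
       END { printf "%.0f\n", total }'
}

# Made-up samples: blank at 0 ms, 50% complete at 300 ms, 100% at 800 ms.
printf '0 0\n300 50\n800 100\n' | speed_index   # prints 550
```

The first 300 ms contribute 300 (nothing is painted yet) and the next 500 ms contribute 250 (half the page is still missing), for a total of 550.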

So the goal of this challenge is simple - can I make this site's SpeedIndex lower than that of dri.es?

Measuring and Baseline

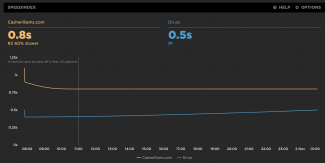

There are a few ways I could measure the SpeedIndex of both this site and dri.es. In order to easily track the numbers over time, I'm going to use a tool called SpeedCurve, which is purpose-built for this task. I've used and recommended it many times in the past when delivering performance audits for clients at Acquia. Most importantly, its base metric is SpeedIndex.

I registered for a new free trial account and configured SpeedCurve to track both sites, and now we have a baseline to work from.

Currently this site's SpeedIndex is 0.8 seconds, which is decent, and only 0.3 seconds slower than Dries' site. However, with numbers this small, that gap also means this site is 60% slower (0.8 seconds versus 0.5 seconds), which is a big gap to overcome.
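The 60% figure is just the 0.3 second gap divided by the 0.5 second SpeedIndex of dri.es (0.8 minus 0.3). A quick sketch of that arithmetic, using a hypothetical `percent_slower` helper:

```shell
# Hypothetical helper: how much slower, in percent, is $1 than $2?
percent_slower() {
  awk -v mine="$1" -v theirs="$2" \
    'BEGIN { printf "%.0f\n", (mine - theirs) / theirs * 100 }'
}

# 0.8 s for this site versus 0.5 s for dri.es.
percent_slower 0.8 0.5   # prints 60
```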

To Come

In future posts, I'll address some simple low-hanging fruit and measure the effects, then dive deeper into specific browser issues, and eventually get into some (probably unneeded) micro-optimizations to see if I can get a SpeedIndex value lower than that of dri.es.

Comments

You might minify your JavaScript file, which saves 66 KB before gzip. That would be another piece of low-hanging fruit. For comparison purposes, webpagetest is also neat and insightful:

https://www.webpagetest.org/video/compare.php?tests=191111_5S_379a279e0…